A basic premise of collaborative mixed reality systems is that they enable mixed presence (collocated and remote) collaboration by effectively blending physical and virtual spaces. In this project we consider what it means to ‘effectively blend’ real and virtual for collaboration. We take a multi-pronged approach, combining infrastructure development, physical-virtual co-design, iterative prototyping and controlled experimentation.

IMRCE

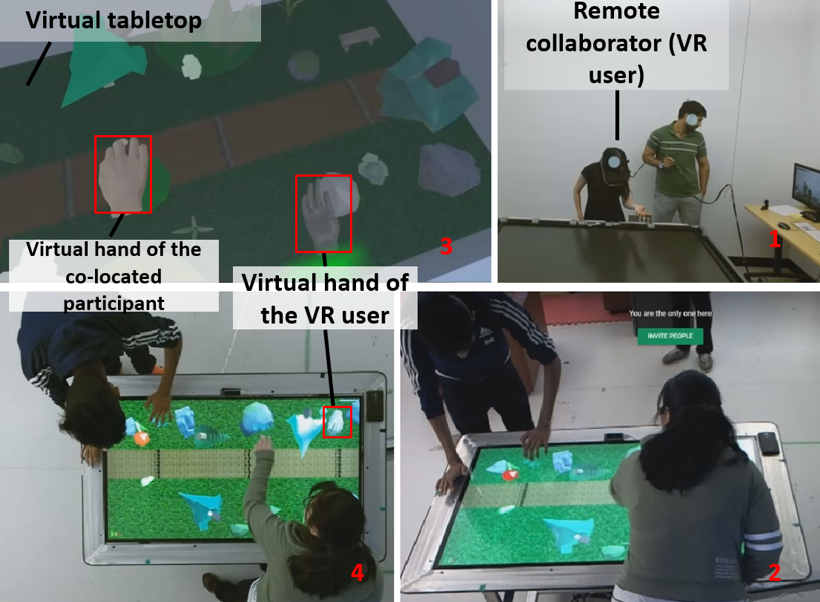

IMRCE is a lightweight, flexible, and robust Unity toolkit that allows designers and researchers to rapidly prototype mixed reality mixed presence (MR-MP) environments that connect physical spaces, virtual spaces, and devices. IMRCE helps collaborators maintain awareness of the shared collaborative environment by providing visual cues such as position indicators and virtual hands. At the same time, IMRCE provides flexibility in how physical and virtual spaces are mapped, allowing work environments to be optimised for each collaborator while maintaining a sense of integration. The IMRCE toolkit was presented at SUI 2018.
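IMRCE itself is a Unity toolkit, and this page does not describe how its mappings are represented. As a language-neutral illustration only, the Python sketch below shows one way a per-collaborator mapping could work: each collaborator's local space has a hypothetical `Anchor` (origin, yaw, scale) into a shared frame, so a hand or position indicator tracked in one space can be re-expressed in another collaborator's differently arranged space. The names and the 2D rotation-plus-scale model are assumptions, not IMRCE's API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Anchor:
    """Hypothetical mapping from one collaborator's local space to a shared frame.

    A local point p maps to shared coordinates as
    shared = origin + scale * R(yaw) @ p, on the 2D tabletop plane.
    """
    origin_x: float
    origin_y: float
    yaw_deg: float
    scale: float = 1.0

    def to_shared(self, x: float, y: float) -> tuple[float, float]:
        a = math.radians(self.yaw_deg)
        c, s = math.cos(a), math.sin(a)
        return (self.origin_x + self.scale * (c * x - s * y),
                self.origin_y + self.scale * (s * x + c * y))

    def from_shared(self, x: float, y: float) -> tuple[float, float]:
        # Inverse: subtract the origin, rotate by -yaw, then undo the scale.
        a = math.radians(self.yaw_deg)
        c, s = math.cos(a), math.sin(a)
        dx, dy = x - self.origin_x, y - self.origin_y
        return ((c * dx + s * dy) / self.scale,
                (-s * dx + c * dy) / self.scale)


def reproject(p: tuple[float, float], src: Anchor, dst: Anchor) -> tuple[float, float]:
    """Express a point from one collaborator's local space in another's."""
    return dst.from_shared(*src.to_shared(*p))
```

For example, if a remote collaborator's virtual table is rotated 180° relative to the tabletop (`Anchor(0, 0, 180)` versus `Anchor(0, 0, 0)`), a tabletop hand at (0.2, 0.1) appears at (-0.2, -0.1) in the remote frame, up to floating-point rounding. Keeping one anchor per collaborator is what lets each person's space be arranged differently while shared positions stay consistent.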

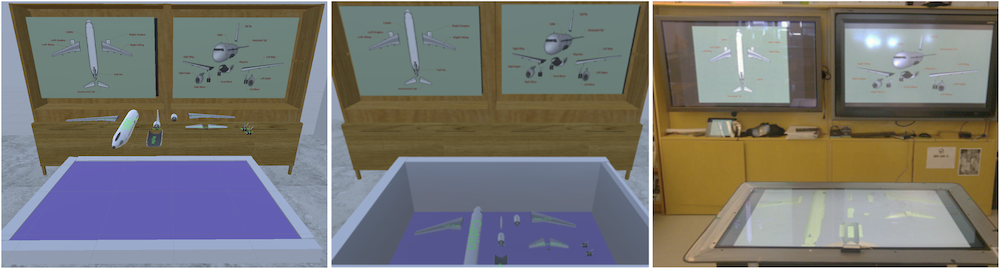

Using IMRCE, we studied an MR configuration in which collocated collaborators work around a tabletop display while remote collaborators wear an HMD to interact with a connected virtual environment that offers a 3D perspective, and we examined the impact of varying degrees of view congruence with their collaborators. In a within-subjects study with 18 groups of three, groups completed task scenarios involving 3D object manipulation around a physical-virtual mapped tabletop. We compared a synchronized Tabletop display baseline with two MR conditions offering different levels of view congruence: Fishtank and Hover. Fishtank had a high degree of congruence, as it shares a top-down perspective of the 3D objects with the tabletop collaborators. Hover had less view congruence, since the 3D content hovers in front of the remote collaborator above the table. The MR conditions yielded higher self-reported awareness and co-presence than the Tabletop condition for both collocated and remote participants. Remote collaborators significantly preferred the MR conditions for manipulating shared 3D models and communicating with their collaborators. Full details are in our CSCW 2019 paper.

Mapping Physical and Virtual Actions

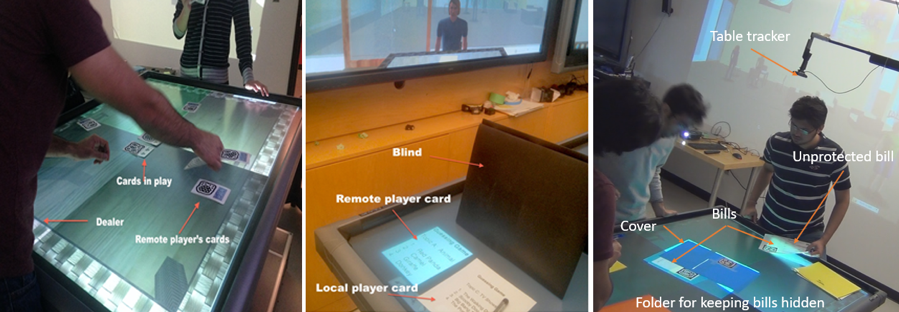

In a controlled study, we consider two scenarios involving hiding and sharing blended physical-virtual documents around a tabletop, under two vertical display conditions: a solo display showing the remote collaborator as an avatar, and circumambient displays showing the rest of the connected virtual environment. We explore four types of cues (interactive, communication-based, ambient, and infrastructure) and their impact on how collaborators hide and share blended physical-virtual documents. Many participants did not clearly develop a sense that the physical and virtual surroundings were fused; as a result, certain physical privacy behaviours (e.g., orienting one’s body to shield documents from the remote collaborator) were less apparent in the study. However, the circumambient displays generated curiosity about how the spaces were connected, and episodes where breaches were enacted or the spatial correlation was otherwise suggested led some participants to trust the environment less. On the table, fiducial markers and digital document “shadows” cued participants about the impact of hiding and sharing physical documents; however, accidental breaches usually went unnoticed. We present these results in our ISS 2016 paper.

We developed SecSpace, a software toolkit for usable privacy and security research in mixed reality collaborative environments. SecSpace permits privacy-related actions in either physical or virtual space to generate effects simultaneously in both spaces. These effects have the same impact on privacy, but they may be functionally tailored to the requirements of each space. Our EICS 2014 paper details the architecture of SecSpace and presents three prototypes that illustrate the flexibility and capabilities of our approach.
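This page does not specify SecSpace's architecture or API. With that caveat, the following Python sketch illustrates the idea of one privacy action producing effects in both spaces: a hypothetical `PrivacyBroker` records a single shared visibility state (the common privacy impact) and asks a renderer registered for each space to produce that space's tailored effect. The renderer descriptions are invented placeholders, not SecSpace behaviour.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Action(Enum):
    HIDE = "hide"
    SHARE = "share"


@dataclass
class PrivacyBroker:
    """Hypothetical broker: one privacy action, applied in every connected space."""
    visible: dict[str, bool] = field(default_factory=dict)
    # Space name -> function producing that space's tailored effect.
    renderers: dict[str, Callable[[str, Action], str]] = field(default_factory=dict)

    def register(self, space: str, renderer: Callable[[str, Action], str]) -> None:
        self.renderers[space] = renderer

    def perform(self, origin: str, doc: str, action: Action) -> dict[str, str]:
        if origin not in self.renderers:
            raise KeyError(f"unknown space: {origin}")
        # Same privacy impact everywhere: one shared visibility flag per document.
        self.visible[doc] = action is Action.SHARE
        # Functionally tailored effect in each space, whichever space it came from.
        return {space: render(doc, action) for space, render in self.renderers.items()}


broker = PrivacyBroker()
broker.register("physical", lambda d, a: f"{'dim' if a is Action.HIDE else 'light'} projection under {d}")
broker.register("virtual", lambda d, a: f"{'blur' if a is Action.HIDE else 'show'} {d} to remote avatar")
```

Calling `broker.perform("virtual", "doc-1", Action.HIDE)` marks `doc-1` as not visible and returns one effect per space, so an action taken in the virtual world is also reflected on the physical table.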

TwinSpace

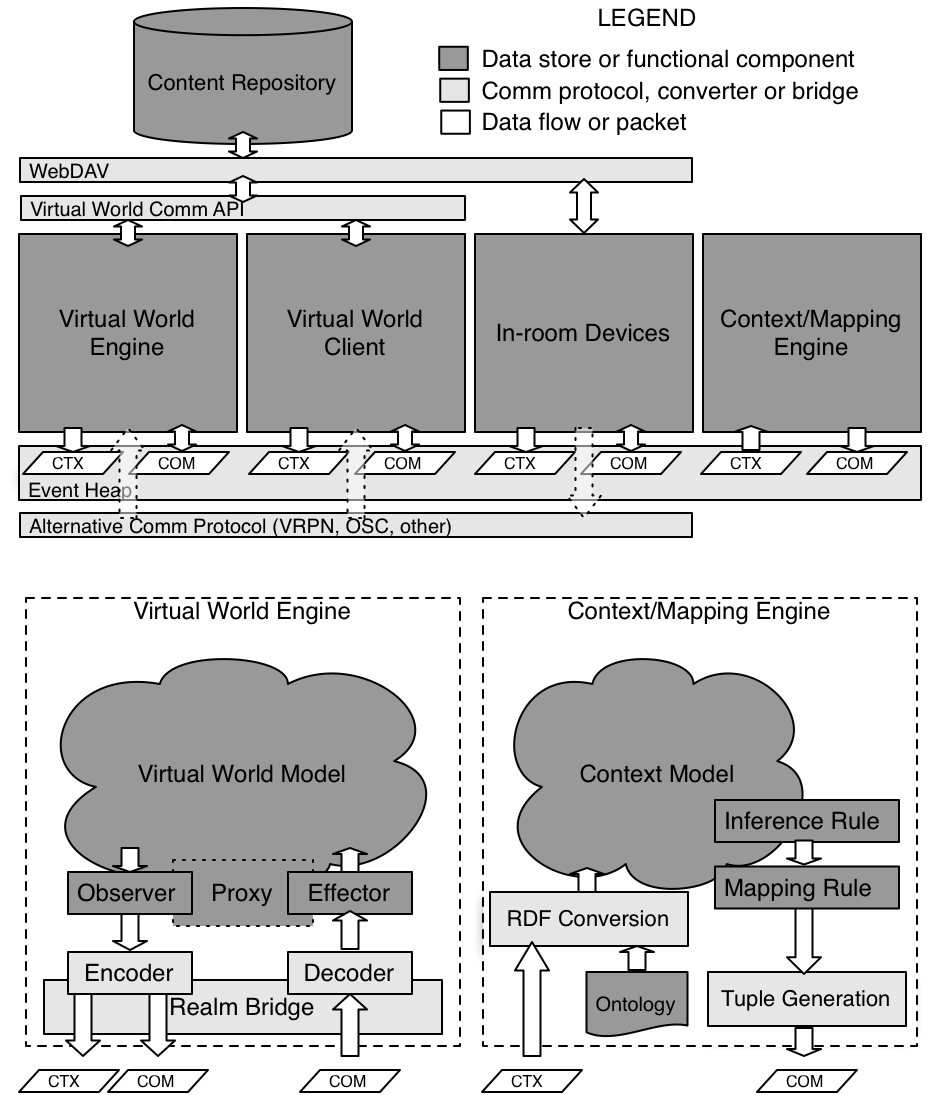

TwinSpace is an infrastructure for collaborative mixed reality built on top of a desktop virtual environment that supports document sharing and editing. It provides a robust platform for rapid prototyping of applications that span physical and virtual spaces, allowing for comparative evaluation of designs. The system is applicable to a wide range of physical settings, virtual environments, and collaborative activities. TwinSpace provides the following four key features:

- A communications layer that seamlessly links the event-notification and transactional mechanisms in the virtual space with those in the physical space.

- A common model for both physical and virtual spaces, promoting the interoperability of physical and virtual devices, services and representations.

- A mapping capability, managing how physical and virtual spaces are connected and synchronized.

- Specialized virtual world clients that participate fully in the larger ecology of collaborative mixed reality devices and services.
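As a rough illustration of how the first three features could fit together, here is a minimal Python sketch. It is not TwinSpace's actual API; every name in it (`Entity`, `Event`, `TwinBus`, `link`) is hypothetical. Physical and virtual things share one `Entity` model, a mapping table links a physical entity to its virtual counterpart, and publishing an event on either side notifies subscribers on both.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Entity:
    """Common model: physical and virtual things are described the same way."""
    id: str
    space: str  # "physical" or "virtual"
    kind: str   # e.g. "display", "table", "document"


@dataclass
class Event:
    source: str  # id of the entity that raised the event
    name: str
    payload: dict = field(default_factory=dict)


class TwinBus:
    """Hypothetical communications layer with a physical-virtual mapping table."""

    def __init__(self) -> None:
        self.entities: dict[str, Entity] = {}
        self.links: dict[str, set[str]] = defaultdict(set)
        self.subscribers: dict[str, list[Callable[[Event], None]]] = defaultdict(list)

    def add(self, entity: Entity) -> None:
        self.entities[entity.id] = entity

    def link(self, a: str, b: str) -> None:
        """Connect two known entities; the mapping is symmetric."""
        if a not in self.entities or b not in self.entities:
            raise KeyError(f"unknown entity in link: {a!r}, {b!r}")
        self.links[a].add(b)
        self.links[b].add(a)

    def subscribe(self, entity_id: str, handler: Callable[[Event], None]) -> None:
        self.subscribers[entity_id].append(handler)

    def publish(self, event: Event) -> None:
        """Deliver an event to its source entity and every entity mapped to it."""
        for target in {event.source} | self.links[event.source]:
            for handler in self.subscribers[target]:
                handler(event)


# Usage: a physical wall display mirrored by a virtual one.
bus = TwinBus()
bus.add(Entity("wall-1", "physical", "display"))
bus.add(Entity("vwall-1", "virtual", "display"))
bus.link("wall-1", "vwall-1")
received: list[tuple[str, str]] = []
bus.subscribe("vwall-1", lambda ev: received.append((ev.source, ev.name)))
bus.publish(Event("wall-1", "show_document", {"doc": "plan.pdf"}))
```

After the final `publish`, the virtual display's subscriber has seen the physical display's `show_document` event. Keeping the mapping in a table separate from the entities is what allows the same physical room to be re-linked to a different virtual layout without changing either side.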

TwinSpace has been used in several research projects; most are outlined below.

inSpace Lab

The inSpace Lab is an experimental environment modelled after the project room, a room that provides a physical home for a project (its members, activities, tools, and products) over its lifespan. The lab is designed to utilize the spatial layout and ‘collaboration affordances’ of the physical space in order to connect with a complementary virtual space.

The inSpace lab is composed of three main regions (see figure). Region A supports brainstorming and generating new content on a whiteboard, region B supports extended group discussions and sharing content on wall displays, and region C supports composing, modifying, and discussing content over an interactive tabletop display.

The inSpace lab is composed of three main regions (see figure). Region A supports brainstorming and generating new content on a whiteboard, region B supports extended group discussions and sharing content on wall displays, and region C supports composing, modifying, and discussing content over an interactive tabletop display.

inSpace serves as our primary testing ground for mixed reality interaction techniques, physical-virtual co-design, policies for privacy, sharing mechanisms, and physical-virtual mappings.

Or de l’Acadie

Or de l’Acadie is a prototype collaborative game built using TwinSpace. The game is set in an abandoned 18th-century French colonial fort, where two pairs of players (the French and British sides) compete for valuables distributed throughout the town; the goal is to collect the most valuables within a time limit.

The game emphasizes asymmetry in both game controls and team dynamics. Each British player controls a single soldier using keyboard controls. The British players are not collocated, and can communicate with each other only when their soldiers are within earshot. Each soldier is equipped with a static top-down map of the fort, and can collect a limited number of riches before needing to return to a location at the edge of the fort to stash them.
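The British side's two constraints, earshot-limited communication and a carrying limit, can be expressed compactly. This Python sketch assumes a hypothetical hearing radius `EARSHOT` and carrying limit `CAPACITY`; the page gives neither value, and the sketch leaves out how the game checks that a soldier is at the stash location.

```python
import math
from dataclasses import dataclass, field

EARSHOT = 15.0  # hypothetical hearing radius, in virtual-world units
CAPACITY = 3    # hypothetical number of valuables carried before stashing


@dataclass
class Soldier:
    name: str
    x: float
    y: float
    carried: list[str] = field(default_factory=list)

    def can_hear(self, other: "Soldier") -> bool:
        """Voice is only relayed between soldiers within earshot of each other."""
        return math.dist((self.x, self.y), (other.x, other.y)) <= EARSHOT

    def collect(self, item: str) -> bool:
        """Pick up an item if there is room; return False when the soldier is full."""
        if len(self.carried) >= CAPACITY:
            return False
        self.carried.append(item)
        return True

    def stash(self) -> list[str]:
        """Empty the soldier's load (assumed to happen at the stash point)."""
        stashed, self.carried = self.carried, []
        return stashed
```

A soldier at (0, 0) can hear one at (9, 12), exactly 15 units away, but not one at (30, 0). The carrying limit is what forces the return trips to the edge of the fort.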

The French side consists of a Mi’kmaq mystic who is able to communicate with spirits, and a deceased French Gendarme. The French players are physically collocated, and use a range of physical controls to compete for the valuables (see figure).

Virtual Supermarket

This project implemented a mixed reality solution for the design contest held at the 3DUI 2010 conference. Items were selected from virtual supermarket shelves by moving a physical trolley around a carpet that was mapped to the supermarket floor. The touchscreen on the trolley presented the first-person perspective of the shopper.
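The carpet-to-floor mapping can be sketched as a linear rescaling of the tracked trolley position. The carpet and store dimensions below are hypothetical, and clamping positions that leave the carpet is an assumed design choice rather than a documented one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x0: float
    y0: float
    width: float
    height: float


def carpet_to_floor(px: float, py: float, carpet: Rect, floor: Rect) -> tuple[float, float]:
    """Linearly map a tracked trolley position on the carpet to the virtual floor.

    Positions outside the carpet are clamped to its edge (an assumption).
    """
    u = min(max((px - carpet.x0) / carpet.width, 0.0), 1.0)
    v = min(max((py - carpet.y0) / carpet.height, 0.0), 1.0)
    return floor.x0 + u * floor.width, floor.y0 + v * floor.height


CARPET = Rect(0.0, 0.0, 4.0, 3.0)   # hypothetical 4 m x 3 m carpet
FLOOR = Rect(0.0, 0.0, 40.0, 30.0)  # hypothetical 40 x 30 unit virtual store
```

With these sizes, the centre of the carpet, (2.0, 1.5), maps to the centre of the store, (20.0, 15.0); one step on the carpet covers ten units of virtual floor, so a small room can stand in for a large store.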

Each selected item was dynamically associated with a physical token. When it was time to ‘check out’, the physical tokens were carried to a physical table/display. The display presented the surface of a corresponding virtual table. When tokens were placed on the physical table, the associated items appeared on the virtual table at the same position and orientation.
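The checkout step combines a dynamic token-to-item binding made at selection time with a pose copied unchanged from the physical table to the virtual one. A minimal sketch, assuming token detection supplies an ID plus a position and orientation in table coordinates shared by both surfaces; `Checkout` and `Pose` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Pose:
    x: float
    y: float
    angle_deg: float


class Checkout:
    """Hypothetical token registry for the checkout table."""

    def __init__(self) -> None:
        self.items_by_token: Dict[int, str] = {}
        self.virtual_table: Dict[str, Pose] = {}

    def bind(self, token_id: int, item: str) -> None:
        # Called when an item is picked from a virtual shelf.
        self.items_by_token[token_id] = item

    def on_token_placed(self, token_id: int, pose: Pose) -> Optional[str]:
        # The virtual table surface matches the physical display, so the item
        # is shown with the token's position and orientation unchanged.
        item = self.items_by_token.get(token_id)
        if item is not None:
            self.virtual_table[item] = pose
        return item

    def on_token_removed(self, token_id: int) -> None:
        item = self.items_by_token.get(token_id)
        if item is not None:
            self.virtual_table.pop(item, None)
```

Because the binding is stored per token rather than per physical object, the same small set of tokens can be reused for whichever items a shopper happens to pick.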

2019

Immersed and Integral: Relaxing View Congruence for Mixed Presence Collaboration With 3D Content

Proceedings of the 22nd ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW 2019), ACM, 2019.

2018

IMRCE: A Unity Toolkit for Virtual Co-Presence

Proceedings of the 6th ACM Symposium on Spatial User Interaction (SUI 2018), ACM, Berlin, Germany, 2018.

2016

Physical-Digital Privacy Interfaces for Mixed Reality Collaboration: An Exploratory Study

Proceedings of the ACM Conference on Interactive Surfaces and Spaces (ISS 2016), ACM, Niagara Falls, Canada, 2016.

2015

Mapping out work in a mixed reality project room

Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI'15), ACM, Seoul, Republic of Korea, 2015, ISBN: 978-1-4503-3145-6.

2014

SecSpace: Prototyping Usable Privacy and Security for Mixed Reality Collaborative Environments

6th ACM SIGCHI Symposium on Engineering Interactive Computing Systems (EICS 2014), Rome, Italy, 2014.

2013

Document-Centric Mixed Reality and Informal Communication in a Brazilian Neurological Institution

CSCW 2013, 2013.

2011

Organic UIs in Cross-Reality Spaces

Second International Workshop on Organic User Interfaces, TEI 2011, 2011.

Tangible Navigation and Object Manipulation in Virtual Environments

Proceedings of the Fifth International Conference on Tangible, Embedded and Embodied Interaction (TEI '11), Funchal, Portugal, 2011.

Toward a Framework for Prototyping Physical Interfaces in Multiplayer Gaming: TwinSpace Experiences

Proceedings of International Conference on Entertainment Computing (ICEC 2011), vol. 6972, LNCS Springer, 2011.

2010

TwinSpace: an infrastructure for cross-reality team spaces

Proceedings of the ACM Symposium on User Interface Software and Technology (UIST '10), ACM, New York, USA, 2010.

A Tangible, Cross-Reality Supermarket interface

3DUI 2010, 2010.

Or de l'Acadie: a TwinSpace demo

UIST 2010, 2010.

Space Matters: Physical-Digital and Physical-Virtual Co-Design in the Inspace Project

IEEE Pervasive Computing, vol. 9, no. 3, pp. 54–63, 2010.